Framework for Moderation

It’s been exciting to see many new tooters on social.coop, and though each of our timelines may look a little different, we are looking for ways to ensure that the social.coop corner of the fediverse continues to reflect our values and ethos as a cooperative and collaborative space. The CWG Ops team has been hard at work on our moderation duties throughout this large growth phase, and we have been grappling with some moderation questions.

We would like to add clarification to encouraged and discouraged behaviours outlined in our CoC and Reporting Guidelines.

In an effort to encourage and model positive and constructive modes of discourse that can prove counter to the norms on other platforms, we’d like to introduce nuance to moderation based on the guidelines outlined on this blog: https://wiki.xxiivv.com/site/discourse.html

In this thread we will discuss different moderation challenges and based on those discussions, will propose amendments to the CoC for approval by the membership.

Sam Whited Fri 13 Jan 2023 11:22PM

FWIW, in all of the things you're complaining about, this is what we did the first time. This isn't about moderators who overreached and deleted things when they should have talked to the user first; this is about users who repeatedly ignored the rules after being politely reminded of them and asked not to post certain content or to soften their tone. These users are hurting others and we're aiming to protect them. If the user ignores us, we have to take stronger actions than just asking them to consider being nicer or not posting anti-vax content or whatever the case may be.

Michael Potter Fri 13 Jan 2023 11:41PM

Aaron, I didn't get the impression that you were a right-wing type. Also, I like to think that I'm realistic enough that if I find an idea threatening, it's because it is. Anyway, I took anti-fascist from our own CoC: "Let there be no confusion, Social.Coop is anti-racist, anti-fascist, and anti-transphobic."

I see what you mean about groupthink and hard rules not always having the intended effect. Will the rules not apply to all situations, or would they condemn people who aren't doing anything wrong? I do believe that truth is generally verifiable, not philosophy or theology, but scientific truth. Certain conspiracy theories are well-known, debunked and aren't worth further discussion, imo. I don't think the bird app blocked disinformation out of the goodness of their hearts, but to protect themselves from liability. We might do well to consider that.

Michael Potter Sat 14 Jan 2023 12:49AM

Online spaces that become popular will attract trolls. The purpose of moderation is to stop them from ruining the experience for everyone else.

Aaron Wolf Sat 14 Jan 2023 1:05AM

Glad Loomio is threaded. Still, slight constriction about making sure I don't get into over-posting… I do want to clarify, trying to be concise.

> I like to think that I'm realistic enough that if I find an idea threatening, it's because it is

I think a far better mental model is: "If I find an idea threatening, I need to trust my intuitions and treat it as a threat because it's dangerous to ignore our fears." We can have that attitude while still holding more lightly to whether we are right about the danger. People in general feel righteous about their judgments when they feel threatened. If we can later get to a state of real open curiosity and equanimity, we can review from a distance whether our fears were well calibrated. If we practice this consciously as a pattern, we can grow in our confidence. I wonder whether you are recognizing enough the threat that comes from being overconfident about the accuracy of our perceptions of danger.

The model I'm proposing is one that does not support inaction in the face of perceived threats. I think it's essential that potential threats be addressed sooner rather than later. I just think we need to do the minimum to address immediate perceived danger and allow for a more patient facilitated process for how to finish resolving the situation after initial reaction.

> from our own CoC: "Let there be no confusion, Social.Coop is anti-racist, anti-fascist, and anti-transphobic."

For transparency, I opposed that when it was added. There were similar tensions in drafting, where people in a state of threat and constriction insisted on these trigger labels being added. I felt and continue to feel constriction about their presence. I read that sentence as people saying they are too scared of nuance and interpretation and feel safe only with some aspects of zero-tolerance policies, and putting it in was a compromise between that view and the view of myself and others who wanted an effective but less blunt and hard-line approach. So, I want the co-op to have moderators who use best practices and make human judgments to block harm. I don't want the co-op to say that certain debates (e.g. questions of biological sex versus socialized gender, or questions about climate science) are absolutely prohibited. I see that attitude as part of the purist trend in social media that blocks constructive dialogue and growth. I do recognize the serious risk of allowing too many subtly dangerous ideas in the name of dialogue. I want our moderation methods to empower humans to adapt practical approaches over time as needed. Anyway, this is already way too long here.

Yes, I support any necessary efforts to protect ourselves from liability.

Aaron Wolf Sat 14 Jan 2023 1:11AM

I don't like the term "hate speech" for all these things. Advocating violence isn't hate speech, it's just advocacy of violence. Hate speech is specifically about targeting particular groups, and that does not include politicians as a group. It even has a legal meaning.

The Trump example is the only one I would support without any constriction myself. Some of those might be okay hidden behind a Content Warning labeled something like "rage rant". It's okay for people to express some hyperbole, especially in context. I'd like it to be acknowledged consciously and not supported as the norm of communication.

Aaron Wolf Sat 14 Jan 2023 1:18AM

This [EDIT: Sam's post, Loomio isn't threaded beyond single-level apparently, darn!] is the first in this thread to explain what the OP was referring to. I didn't even notice the issue until now. Can you just give a quick summary? I'm imagining something like: poster links to mainstream articles that are popular with anti-vaxxers even though the articles do not take anti-vaxxer position; then people complain; the posters keep doing it and ignore complaints; moderators step in and delete stuff. Is that right?

If so, this goes to my core point. I want to see more of an onboarding process where new members are actively introduced (like in conversation with some guide, a real human being) to the social norms, which include making adjustments in light of complaints rather than ignoring them. There's almost always some way to improve. And if someone egregiously complains too many times and is hassling others, moderators can deal with that pattern. I don't see how a community can maintain healthy norms and be welcoming without an active onboarding process.

I fully support moderators taking action if users ignore complaints. Furthermore, I wish there were a way to do something like hide a post behind a Content Warning even if the original poster didn't add one, because that is a middle-ground action between merely asking the poster to take action themselves and just deleting their post.

tanoujin Sat 14 Jan 2023 1:27AM

Hi Matt, I'd like to look into that case if there is any documentation available - do you have a link to the evidence or related discussions?

tanoujin Sat 14 Jan 2023 1:57AM

I see your point, Aaron, but I think instead of hiding a post, the best practice would be to moderate proactively: adding a public mod warning before a thread gets out of control, locking it and, yes, deleting toots that violate the CoC (https://wiki.social.coop/docs/Code-of-conduct.html). I take this from experience with fora, so I am not sure how to realize that in a microblogging environment, though.

If you want discussion about questionable toots, you will run into dead ends offering mediation, simply because (qualified!) manpower is limited and such processes will most probably fail if there is no commitment from the parties in conflict.

I could imagine a way to submit a moderation complaint to a committee of supervisors, which handles such cases swiftly behind the scenes but transparently to our members. Subsequent discussions should use the usual channels, imo. (You can see Matthew Slater trying to initiate something like that above.)

tanoujin Sat 14 Jan 2023 2:24AM

@Ana: I have had good experiences (elsewhere) with the goal of keeping all posts in accordance with (don't laugh at me) the UDHR (https://www.un.org/en/about-us/universal-declaration-of-human-rights).

Just two examples of this minimal consensus in action:

Matthew's "Too bad Epstein wasnt hung by his balls" -> Art.5 "No one shall be subjected to torture or to cruel, inhuman or degrading treatment or punishment."

A person tooting an SS flag without any sarcastic or ironic framing, i.e. showing a symbol of "the violent elite of the master-race" -> Art. 1 "All human beings are born free and equal in dignity and rights. They are endowed with reason and conscience and should act towards one another in a spirit of brotherhood." (and a couple more).

That makes it pretty easy, right? Instead of listing what we do not want to see, we refer to a catalogue of rights that, at a minimum, we want to see respected within our reach.

Matt Noyes Sat 14 Jan 2023 2:28AM

It would be great if mods could add CWs, as it is the user has to do that (which is good in that it requires us to convince the user that it is a good idea, but only leaves us with the option of deleting the toot if the user does not agree...)

Aaron Wolf Sat 14 Jan 2023 6:31AM

I'm confused about your reply. Hiding a post (if it were possible) is proactive moderation and is specifically about taking action before a thread gets out of control. Oh, I guess you imagined hiding to be like allowing people who already saw it to go ahead arguing and replying to each other not even realizing that it's now basically private? I didn't mean that. The hiding I imagine would be temporary disabling that just allows it to be edited and reposted (Discourse has that functionality for flagged posts, though they don't do it proactively enough and the default notice is way too harsh about shaming someone for getting flagged instead of helpfully encouraging them to edit and be gracious).

The context I imagine is one where parties commit to engage with constructive processes as part of joining the co-op and where norms about conflict-resolution and so on are repeatedly highlighted as part of the culture.

Aaron Wolf Sat 14 Jan 2023 6:36AM

I found an open issue https://github.com/mastodon/mastodon/issues/1307

@Literally@social.coop Sat 14 Jan 2023 3:26PM

From a totally different angle: having some ongoing education on how filters work would help here.

There are people I would be happy not to block, but if they use a Twitter cross-poster I would rather not see the retweets, not because the content is offensive to me (it may or may not be), but because it's part of a larger conversation or thread on a different site.

If I (and others) could figure out how to filter out retweets (for example) on the home, federated, and local feeds, if some type of posts bother me I could just...not see them.

tanoujin Sat 14 Jan 2023 9:51PM

To-dos from emi's opening post:

1. <<In this thread we will discuss different moderation challenges and based on those discussions, will propose amendments to the CoC for approval by the membership.>>

2. <<add clarification to encouraged and discouraged behaviours outlined in our CoC / Reporting Guidelines.>>

3. <<...encourage (and model) positive and constructive modes of discourse (that [...] can prove counter to the norms on other platforms.)>>

----------------------

ad 1.

moderation challenges: examples?

propose amendments after discussion: texts?

ad 2.

encouraged:

inclusivity, open participation / care, consideration, respect / avoid assumptions of bad faith / constructive critique / legitimacy of resistance / authenticity / deescalation -> https://git.coop/social.coop/community/docs/-/wikis/Conflict-Resolution-Guidelines-v3.1

note: missing link on the Conflict Resolution Guidelines Page

discouraged ("unacceptable")

violence, threats / harassment (incl. sexual attention, intimidation, stalking, dogpiling) / offensive, harmful, abusive comments (insults) in relation to diversity / advocating or encouraging any of the above

Reporting guidelines https://git.coop/social.coop/community/docs/-/wikis/Reporting-Guide/Reporting-Guide-v3.1

note: the how-to / procedure is a work-in-progress paper: missing image, missing link to CMT (?) for appeals.

ad 3. note: I see this realized in the spirit of the conflict resolution guidelines

Suggestions:

Moderators, please deliver examples of the challenges you are facing. Let us try to see how your examples relate to CoC, Reporting Guidelines, Conflict Resolution Guidelines. Let us quote text passages that we want to improve or complete, make proposals and discuss.

Questions:

Is there any documentation of moderation we can use here?

@emi@social.coop: does the Community Working Group have anything in the pipeline yet? What grappling with moderation questions did happen? How can the plenum support you?

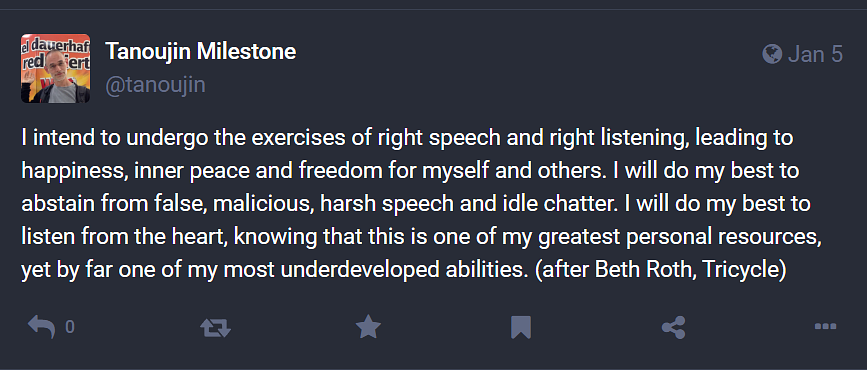

tanoujin Sun 15 Jan 2023 5:55AM

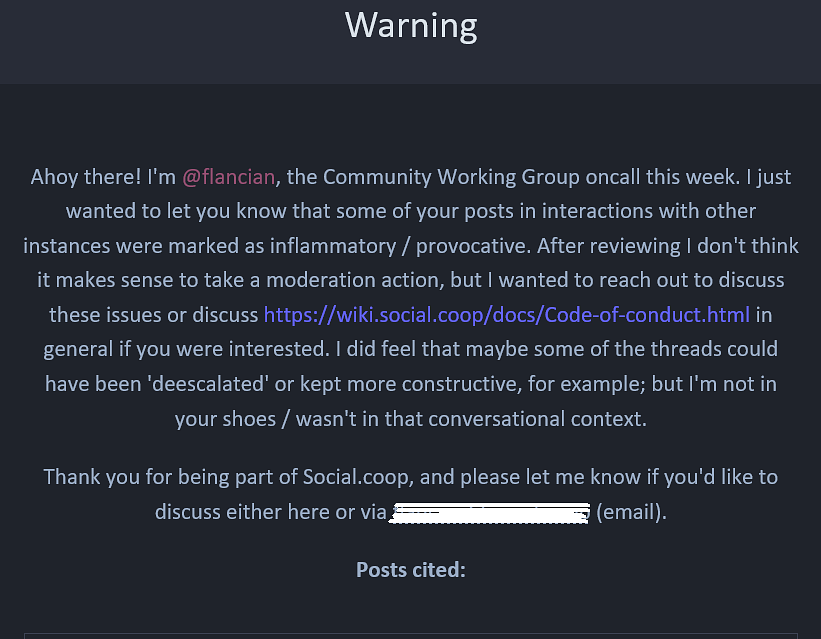

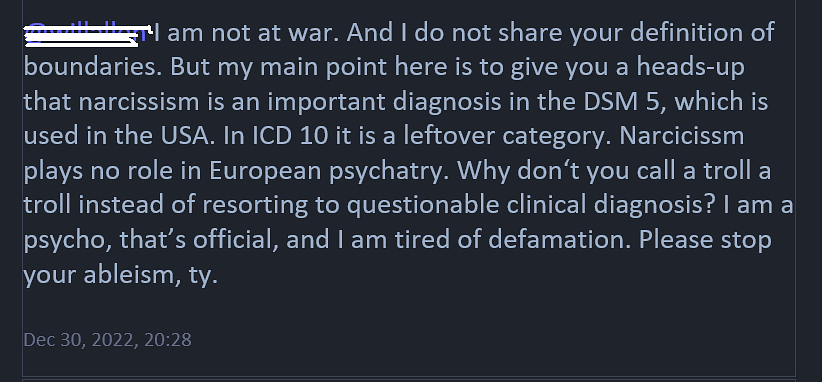

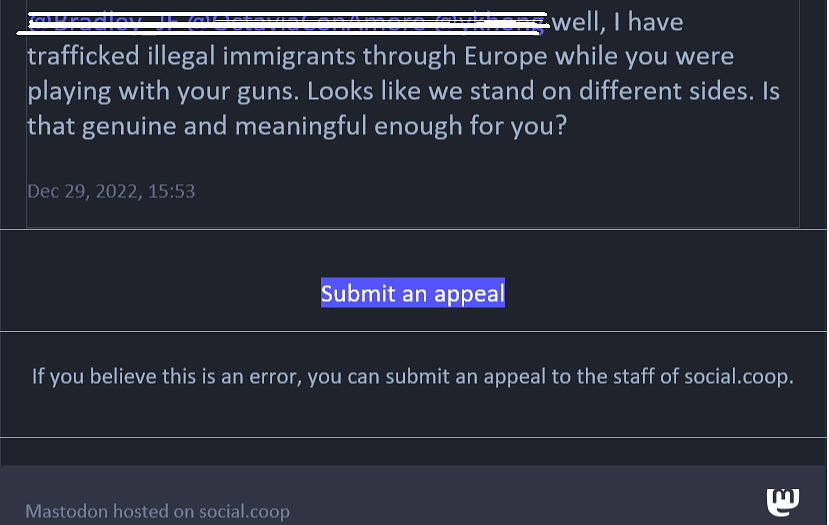

Having realized that we are running into permission issues here, I am providing my moderation experience with Flancian. Good job, @Flancian, thank you again! Let me take the opportunity to invite others to share their moderation experience for a constructive discussion of whether and how the CoC and the Reporting/Conflict Resolution Guidelines should be amended.

Doug Belshaw Sun 15 Jan 2023 1:20PM

I'm not sure there's much point in my adding my opinion to this thread, other than to say that I've historically blocked several people who have replied here due to their posts flouting any Code of Conduct worth having.

Michael Potter Sun 15 Jan 2023 3:46PM

That is very interesting.

Aaron Wolf Sun 15 Jan 2023 9:34PM

Interesting. For perspective when reading my question below, note that these cases are all new to me. I've barely posted or read anything (I actually go to or use social.coop a couple of times a month at most, though I plan to participate more in the future), so I'm sure I haven't seen any of the relevant toots.

@Doug Belshaw Since you brought it up, can you tell us whether you did anything other than blocking? So, did you consider or were you aware of any options besides blocking? Was there any way in which anyone (you or others, moderators or not) did anything in the direction of consciousness-raising or feedback so that the members in question would have any chance to even know how others like you were reacting to their posts?

Doug Belshaw Mon 16 Jan 2023 6:27AM

Yes, Aaron, blocking is a last resort for me, and I usually mute. Sadly, in the last few months I've had to mute many people on this instance.

Aaron Wolf Mon 16 Jan 2023 6:58AM

Since the purpose of this thread is figuring out whether improvements can be made to conflict resolution and moderation process and policies, can you describe generally what your other efforts tend to look like and how they tend to go?

Doug Belshaw Mon 16 Jan 2023 8:24AM

No, especially given you're one of the people I've historically muted. I find it inordinately tiring to (endlessly) debate this stuff, and find requests to explain why I'm opposed to what I believe to be anti-vaxxers and Nazi enablers very frustrating.

This is how good instances turn into bad ones IMHO; I'm one step away from quitting social.coop for the second time.

Will Murphy Mon 16 Jan 2023 3:01PM

I did not see the posts in question, but I just looked up Jem Bendell's writings on COVID and this is definitely someone with a history of misrepresenting data to downplay the efficacy of vaccines.

From https://jembendell.com/2022/10/09/theyve-gone-too-far-with-the-children-so-what-do-we-do/

> UK data from the Office of National Statistics for the year until the end of January 2022 showed that confidence about the effectiveness of vaccines against hospitalisation and death was premature. Although being doubly vaccinated reduced likelihood of death for most of 2021, after Omicron arrived the death rates in the doubly vaccinated but unboosted rapidly grew to higher than in those who had never been vaccinated (across all age groups). This was graphically represented by the ONS until early April 2022 when they removed the graphs, so we can see them using the wayback engine for their website at the end of March 2022. Is this an anomaly? Official data from USA finds something similar. Vaccinated Californians had a higher rate of hospitalizations (severe illness) than those who were unvaccinated but had prior immunity from a past infection. The government did not do that analysis of its own data, but it is easy to do for yourself. That is enough reason for us to keep watching this issue – and one of the best places to observe this issue is Australia, because it still collects and releases decent data.

This is a classic data misuse technique where you start with one claim "confidence about the effectiveness of vaccines against hospitalisation and death was premature," change the topic slightly to a different question, "Vaccinated Californians had a higher rate of hospitalizations (severe illness) than those who were unvaccinated but had prior immunity from a past infection," and then cite data that actually refutes the original claim as if it supported it.

The latter citation leads to this graph, which shows that vaccination provided 6-8 fold protection compared to being unvaccinated in the Fall of 2021. Yes, for the Omicron wave specifically (but not any previous wave, BTW), those who had previously had COVID did enjoy similar and likely even better protection compared to those who were vaccinated, but that's a different claim. That's the question of "are the vaccines more effective than previous COVID infection", which is entirely different from the stated claim about "the effectiveness of vaccines against hospitalisation", which the cited data clearly show.

Also consider the conclusion of the article that was cited:

> What are the implications for public health practice?
>
> Although the epidemiology of COVID-19 might change as new variants emerge, vaccination remains the safest strategy for averting future SARS-CoV-2 infections, hospitalizations, long-term sequelae, and death. Primary vaccination, additional doses, and booster doses are recommended for all eligible persons. Additional future recommendations for vaccine doses might be warranted as the virus and immunity levels change.

So my point here is that @Matthew Slater's comment frames it as a foregone conclusion that the removal of Jem's posts was an undesirable moderation result, but based on this history I don't think we should accept that framing.

Matthew Slater Mon 16 Jan 2023 3:45PM

Thanks for looking into this Will.

I think it is perfectly acceptable to cite data in an article which draws different conclusions from the data. I've seen papers which draw wrong conclusions from their own data because they are only written for the headlines.

I see how Bendell makes one claim and then switches to a closely related claim, but he says what he is doing and why: because the UK stopped publishing that data. I don't see what is being misrepresented here, and your assertion of 'misinformation' seems very strong. I know Bendell very well and I know that he acts in good faith and with professionalism.

I would rather be confused and undecided about these technical matters than have them filtered for me by people who, for all I know, might have different standards of truth for different opinions. Wouldn't you agree that claims for vaccine efficacy were criminally exaggerated in the early days and hence siding with the skeptics was/is quite a reasonable course of action?

Aaron Wolf Mon 16 Jan 2023 4:10PM

Doug, I don't know what conflicts we've ever even had, and I'm barely active on Mastodon. I would really love to somehow clarify misunderstandings (or even whether you are mistaking me for someone else). I'm struggling to guess how much I could even say effectively in plain text. I take it you feel exasperated right now, and I just want to express my sympathies.

I deleted a longer reply I first posted. It's for a discussion between people willing to discuss. Would you please clarify whether you are at all open to discussing here on Loomio what we as a co-op can do to improve the experience you are having in this community? I don't want to add to your frustration by presuming your willingness. I appreciate you for sharing your thoughts and feelings here.

To point out one bit of clarity for anyone reading: I was not in any way asking for explanations about objections to vaccine-skeptics etc. I was asking whether people are doing anything besides muting, blocking, or replying. The things suggested by the conflict resolution guidelines (https://wiki-dev.social.coop/Conflict_resolution_guide ) are private communication to posters (not to argue about the topic but to express feedback/requests about the conduct issues), asking for help, and filing a formal CoC report. I think we can expand that with more specific guidance and other options. So, my question was whether people are only muting, blocking, replying, or doing nothing. Are people asking for help and reporting issues, and if so, is that not working well?

Michael Potter Mon 16 Jan 2023 5:05PM

I saw that in Jem Bendell's posts, too, and this is exactly the kind of thing I'm talking about. To be clear, I think the document Emi initially posted is terrific, and it's a great resource in a small group of people who are committed to enlightened interactions. Unfortunately, this group is already too large for that and we already have "challenges," so that document is like the obelisk at the start of Kubrick's 2001.

We need a more complex protocol. The question is, how do we determine whether a person is willing and able to build a bridge? Frankly, I looked at Jem Bendell's posts, one of which included a link to Rumble, and I was pretty sure I wouldn't be able to build a bridge with him, or with people like Matthew Slater who are shilling for him. We'll just end up going in circles and wasting time.

I think a sound policy, or the beginning of one, could be to check posts against resources like this:

https://realityteam.org/factflood/

If a post is demonstrably false, already debunked, etc., I think moderators need to be able to issue a warning, one warning only, or we risk a bad actor stringing us along. Then, moderators delete the post (or add a content warning, if that's technically viable and appropriate). If there are repeated offenses, then the person gets kicked out of social.coop, as in excised, out the airlock. This would send a very clear message both to people testing to see what they can get away with, and to regular users who might feel that this site isn't moderating effectively.

Aaron Wolf Mon 16 Jan 2023 5:16PM

Strong agreement. We need to be proactive at blocking harms right away. Then, we can have processes for restoration/resolution etc. once it's clear that contentious risky things are limited. Otherwise, we lose people and the community degrades. What I want to see is the enlightened-small-group-ideals etc all in place for what happens after the initial stop-harm actions.

So, newcomers should find out right away that almost nothing problematic goes unaddressed. However, we shouldn't then consider the job done. We then want to onboard people with human connection and understanding, and support them in becoming constructive co-op members and building skill at discussing controversial things with due care and sensitivity.

So, it's a yes-and approach I'm suggesting, not a dichotomy of passivity vs blunt blocking as the only choices.

Graham Mon 16 Jan 2023 5:38PM

I've been vaguely paying attention to this discussion for a while, and haven't wanted to get involved because it's not my field, but it feels like things are getting a bit crazy in here right now. To suggest that someone like Professor Jem Bendell is in some way a 'bad actor' seems nonsensical to me. He's a respected academic who, as far as I'm aware, is focussed on an objective and rigorous scientific approach. And to use pejorative language to describe Matthew Slater, someone I know and have a lot of time for, indicates to me that this debate may be losing its grip on common sense.

Yes, we all want social.coop to work well and be successful, and that means having a useful policy framework that can help moderators to do their work fairly and in the spirit of cooperation and tolerance in which I hope this place is founded, encouraging users in turn to be cooperative and tolerant. If the intent is instead to ensure that social.coop stops people like Jem and Matthew from contributing then I fear that cooperation and tolerance are long gone. And if that's the case, then I will most likely go too.

Ana Ulin Mon 16 Jan 2023 6:34PM

It is January 2023. We are no longer "in the early days", and the context and data around Covid vaccines and the associated discourse has vastly changed.

Ana Ulin Mon 16 Jan 2023 7:33PM

Lest anyone think that @Doug Belshaw is an outlier, I'll say that I, too, have recently been forced to start muting and blocking folks in the social.coop timeline, and have started to strongly consider moving instances. social.coop has never been perfect, but it feels like we are not trending in the right direction.

I made an attempt at engaging on this thread early on, wanting to try to give Matthew Slater the benefit of the doubt. It's clear to me now that he is a right-wing troll -- "anti-woke", in his own words (for anyone who might want some context on the term, see e.g: https://www.theguardian.com/us-news/2022/dec/20/anti-woke-race-america-history).

Since this thread, despite being titled "Framework for Moderation", actually seems to be about Matthew's complaints on moderation of Bendell's posts, and since I do not think that trolls can actually be appeased, I shall do my best to stay away from this conversation from now on. (Pray for me, y'all.)

But perhaps we do need a separate, serious discussion on what is our collective stance in the face of such members.

Much love to the folks of the CWG, who have to deal with this shit all the time. 💗

Aaron Wolf Mon 16 Jan 2023 7:42PM

@Matthew Slater said:

> Wouldn't you agree that claims for vaccine efficacy were criminally exaggerated in the early days and hence siding with the skeptics was/is quite a reasonable course of action?

That's not an assertion that we are in the early days now. He was describing an opinion about what was the early days (i.e. the very beginning of vaccine availability in late 2020 and early 2021).

I happen to not agree with the ironically exaggerated language of "criminally exaggerated". The public messaging around covid was indeed exaggerated, flip-flopping, problematic, hypocritical… and the best views I found were from people like Zeynep Tufekci. The hyperbole of "criminal" to describe the tragedy of incompetent public messaging seems unhelpful though.

Anyway, the key point IMO is that the co-op should not take a hard-line position on discussions of vaccines but should take some sort of hard-line position on use of triggering and provocative language around identified sore-spots.

We could still make a democratic decision that vaccine-skepticism is too much of a landmine and so we simply ban it as a topic despite acknowledging that doing so is an unfortunately blunt decision. I would personally prefer that we mark certain topics like vaccine-skepticism as "sensitive" and require extra care such as that all mention of identified sensitive topics get specific CW's and hashtags and avoid provocative language.

In short: there are all sorts of policies and tools that fit different levels between unhealthy tolerance (i.e. allowing harms to the community, such as turning away lots of people) and zero-tolerance.

Aaron Wolf Mon 16 Jan 2023 7:52PM

I believe reactions like yours and Doug's are typical and are representative of the harms caused by allowing problem posts to keep going. The top priority IMO is to take immediate action to make sure people like you and Doug (and so many silent others) feel welcome and reassured enough to stick around.

Making sense of how fair it is to judge Matthew as a right-wing troll has to come later (and ideally wouldn't be asserted like this if we can cut off initial tensions sooner, so you don't feel exasperated). The story in my mind is that defensive frustrated people are more quick to judge and that supposed trolls also dig in defensively when threatened, labeled, and targeted as the problem (ironically escalating the conflict in their defensiveness). Incidentally, I have no reason myself (in what little I've seen) to think anyone on any side of this is here in bad-faith. I don't believe that can be effectively discussed at all while people are "hot" with immediate reactions, feeling constricted and defensive. If someone really is persistently toxic, we will still see that when we are calm and reflective.

We need ways to cut off the immediate conflicts in order to then deescalate and figure out later what to do when we're more relaxed. We need ways to temporarily block things while expressing to everyone that it's about addressing the immediate tensions and that we have a due process to help everyone willing to work on being constructive and accommodating co-op members.

Matthew Slater Mon 16 Jan 2023 8:34PM

I called this discussion in the hope of tightening up the moderation guidelines, and I have been dismissed, by name, for the first time in my life I might add, as shilling disinformation, as anti-woke, and as a right-wing troll.

Let me remind you, I haven't abused or insulted anyone here. I've listened and been polite at all times. Yesterday on the site somebody accused me of 'spreading misinformation' and then blocked me. IRL that's called slander, and talking behind someone's back.

There's nothing more I can do here. It feels like the worst parts of pre-Musk Twitter, with a veneer of cooperative righteousness, which I find ugly. So this is goodbye. A troll leaving of his own accord. Right.

Matt Noyes Mon 16 Jan 2023 8:42PM

Thanks, Aaron, I think this is helpful. I also hope people will cool down, take time away if needed, and then think about how we can help improve our moderation and, more importantly, the relationship-building that builds trust and patience.

Lynn Foster Mon 16 Jan 2023 8:52PM

I truly hope that social.coop does not turn into a place where someone's ideas of political correctness and scientific correctness beat out actual science and respectful political discussion. We have Twitter for that.

We so badly need social spaces where the harms of capitalism don't basically control the discourse. It looks from where I sit that a lot of the US mainstream media is under substantial control by capital in the US, and this is true in many other countries too. So for example I don't consider the sources on the list at https://realityteam.org/factflood/ of "the most reliable national news sites" very comforting. In journalism, as well as in public health, we have some crazy mix of non-bought people in all positions trying to do the right thing and provide useful information, vs. people in positions of supposed public trust who will lie to beef up their stock portfolio, politicians who will lie to satisfy their biggest donors, people who have to lie to keep their job, etc.

I also think we need to allow people to develop informed opinions and decisions, and to raise our levels of critical thinking ability, in safe spaces. I find the level of "I am right and I will protect people from you and protect you from other people" making an appearance above to be somewhat scary in the way that our society's control of "truth" is scary now. In the way that the McCarthy era in the 1950s in the US was scary.

I'm not an expert in moderation. And it is a thorny issue. But as of now, having read through the relevant conversations of the last days, I would agree with the position that moderation should address speech that is directly aimed at hurting people in some way, or inciting hurt, or even just mean and disrespectful. I don't think we want to, or even can given the resources and level of governance it would take, moderate for what is actually science, especially when presented respectfully with links to studies. It's a moving target by its nature (the scientific method), it is co-opted in so many ways, and more information is good.

Also, fortunately, people can follow, mute, block who they want. That seems to me like a perfectly OK way for people to manage their social networks.

To emphasize, this is my 2 cents as a person who does not have moderation experience, and does not often run into disrespect because my networks are small and I spend less time on social media than anyone I know. I could be wrong about some of the above, but I don't think I'm wrong about the danger that "I will control what (respectfully delivered) content you can hear" presents in this stage of capitalism.

Will Murphy Mon 16 Jan 2023 9:25PM

Hi @Matthew Slater, can you confirm whether you are currently a social.coop member? Your Mastodon profile page says you have left the coop, and I couldn't find you among the contributors on Open Collective.

Aaron Wolf Mon 16 Jan 2023 9:32PM

I have no other knowledge, but maybe that's because he quit? He posted here 50 minutes before your question.

Will Murphy Mon 16 Jan 2023 9:36PM

Ah thanks, I missed that comment.

Matt Noyes Mon 16 Jan 2023 9:39PM

Hi folks, look, we here on social.coop have been buffeted by a couple of big waves. First, Covid, with all the trauma (lives lost, physical damage), anxiety, fear, confusion, overwork, etc. that comes with it. I would be lying if I said that three years of constant concern haven't taken a toll on me. Not to mention the mental labor of sorting out my understanding of and response to changing information and policy in a highly charged atmosphere. And the toll of physical isolation.

Second, the recent influx of former/continuing Twitter users, who often bring with them a culture of anger, defensiveness, contempt, provocation-outrage, etc. (along with some great content and insights!) The first wave of Twitter users in 2018, and the reactions of existing members, nearly destroyed SC, but ultimately led us to make crucial improvements and build a better culture.

Moreover, we have grown rapidly -- this influx is much bigger -- and are finding our existing protocols and organization practices tested. Changes are needed, but I think our current Code of Conduct and Conflict Resolution Guidelines are both "good enough for now" and "safe enough to use." The immediate challenge we face is what to do when members refuse to follow the guidelines (e.g., by escalating) and reject the efforts of community working group ops team members to encourage good behavior and facilitate conflict resolution.

So far, our experience on the CWG ops team has been that people are usually willing to engage with us and take steps to resolve conflicts, even when they think we are wrong, for example by using content warnings or editing a post. People routinely post about controversial subjects and others decide if and how to respond, including by muting/blocking, if they wish. But, in the case of Covid vaccine related content, this hasn't been the case. That's why we on the ops team are looking for additional approaches and guidance. To that end we opened this discussion, knowing that there was a risk of further conflict and loss of members. I think there is a lot of useful content in this thread.

Soon, in the next couple of months, we need to hold elections for the CWG ops team, so maybe it would be good to have some live sessions to introduce the work involved and help new people come in. We have encouraged people to join our meetings to see what we do and how we do it.

Scott McGerik Tue 17 Jan 2023 1:23AM

I'm looking for suggestions for training/learning materials on moderation, de-escalation, conflict resolution, etc. I admit to being in over my head on these topics, but I want to contribute to and support the community.

tanoujin Tue 17 Jan 2023 6:37AM

There would be so much to say on multiple levels - I just wish everyone all the support and care they need beyond this little forum to process the emotional stress such confrontations can individually cause.

@Matt: "The immediate challenge we face is what to do when members refuse to follow the guidelines (e.g., by escalating) and reject the efforts of community working group ops team members to encourage good behavior and facilitate conflict resolution."

From my POV and recent experience you are doing enough to offer discussion about problematic toots. Right now you are going public with some of your routines, and that is an important second step.

Usually one moderator is in charge of a specific case and handles the communication, consulting their peers if needed. As far as I know, at a certain point of escalation the conflicting user is confronted with an ad-hoc group decision, and more drastic one-sided measures are taken. After that policing operation the reprimanded user has an option to appeal (I have never encountered this on either side of the show), and there will be a review and a final decision. So far so good.

Since you are an elected member of the democratically legitimated moderation team, and the code of conduct in its current version was self-imposed by the coop members, you have the mandate to interpret and enforce the rules to the best of your knowledge.

Mistakes might be made and a learning process takes place; possibly there could be efforts to make this more transparent to facilitate public oversight and the development of merit and trust.

So, what to do if a member does not cooperate? I am a newbie, and I was not aware of the scope of the contract I was entering. This changed very quickly and we had our handshake. I was corrected, and I changed my course to something better than what I was used to elsewhere.

If a member does not cooperate, you will explain the consequences, and if that does not help, you will boot them. That is the burden of your office. The reactions of the witnesses may be mixed, and you yourself will face consequences no later than the next elections. So you will try to do your best, if not out of principle anyway.

If this discussion here works out as intended, you will not have a handful of factions beating each other up, but a consensus born out of compromise.

To discuss Covid is a quagmire. There is less light than we wish for, hopefully we will know better in some years. But now we are in the midst of it, albeit this is not the topic at hand.

I understand you need more appropriate directives for your work.

Can we agree to add something like:

Unacceptable: false claims, abuse of scientific sources, etc.

Discouraged: pretending to belong to established discourse communities

Encouraged: adhering to the standards of academic writing when presenting, interpreting or questioning scientific topics.

Mind you, I do not attribute any of this to preceding toots; it is just about what we ask of a toot to qualify. I suggest keeping it at that level, even if some review takes place here.

This might alleviate discussions about toots and your work in the future - which seems urgently necessary to me.

Edit: I will refrain from posting for the rest of the week. Thanks for your patience.

Sam Whited Tue 17 Jan 2023 12:03PM

For what it's worth, we have tried to give them every benefit of the doubt (long past what was due, IMO). They don't have to agree with everything in the social.coop code of conduct, or the spirit in which we enforce it, but these two have repeatedly argued with the moderators, refused to make changes, re-posted things we've deleted that other users have reported, etc.

When we say "use a content warning on this type of post please", the answer is to either appeal it to the community or just say "I disagree, but yah, sure, for the sake of the community who didn't like it I'll start doing that". Attacking the moderators, refusing to follow up, or just re-posting it, etc. is a problem.

I'd also like to caution us all in this thread a bit: let's not feed the trolls and turn this into arguing over whether COVID is real or not; the point isn't whether the thing you were moderated for is correct or not, and that's going to make this discussion less useful. While I personally think we should simply ban posting anti-vax stuff, that is not the problem here. The problem is how they responded to the community and, after escalation, to the moderators asking them to stop.

J. Nathan Matias Tue 17 Jan 2023 3:02PM

Hi @Scott McGerik, thanks for raising this question. I'm not aware of any trainings, unfortunately. A few folks in our research lab, which specializes in working with communities on moderation questions, have been brainstorming whether to co-design with communities a "summer school" of short weekly sessions that are accessible to folks who want to do or already do this work, even as volunteers. I would be interested to hear more about the demand for it (outside of this thread would be best, I think).

Christine Wed 18 Jan 2023 12:07PM

Because the goal of the thread is to propose updates to the code of conduct, it would be very helpful if someone from the community working group could clarify which problems they are trying to solve with the update. Apparently one problem is users ignoring content moderation actions, but it's not obvious how the guidance in this piece would mitigate an enforcement problem. It also sounds like there are other challenges that content moderators are grappling with, but normal users do not necessarily know what those are. With more complete information, we could provide more relevant feedback.

Aaron Wolf Fri 20 Jan 2023 4:01AM

[edited from a similar post I made on Matrix] I want to apologize for some of my confusion and overly-long replies here. I think I now better see some of the issues.

The key point: the CoC does not have anything adequate in it to address disinformation. I am wary about how it could possibly be done, but we have to have some capacity to defer to the CoC in removing harmful disinformation.

I have been overly focused on conflict-resolution (and too compulsive in my unsuccessful efforts to help resolve immediate tensions). I still think conflict-resolution and various intermediate forms of moderation are essential and that we clearly have some missing processes and norms for those. That does not in any way reduce the need to update the CoC. We need both.

The link in the initial post here was all about conflict-resolution and healthy communication, so I focused on that, thinking that was the topic. But the covid-debate clearly brings up the need to support some sort of CoC around disinformation. How can we figure out some CoC wording that supports the CWG in moderating such things without being too blunt and too deferential to whatever the dominant mainstream view of topics happens to be?

Bob Haugen Fri 20 Jan 2023 1:31PM

I think some of the current information/disinformation controversies, including those that MatSlats and Jem Bendell have been involved in, are lose-lose arguments. For example, vax vs anti-vax. The official mainstream views of these topics have lost credibility with many people, because the official sources, including the US government, have lost credibility. And many of the "alternative" sources are unreliable, too.

So if the moderators allow these topics, they could take over the space, and drown out all other topics, because many of the arguers are passionate about their opinions, often on both sides of the issue.

So I think it might help to create an alternative channel for such arguments. I don't know exactly how that would be done, might need to be a centralized Mastodon site where moderators can redirect such topics.

Alternatively, the moderators could offer an easy way to filter out arguments about such topics. Like, I would immediately filter out vaccination, vax, and anti-vax.

This is an alternative to banning the people. Both MatSlats and Jem Bendell do good work and provide very useful info on other topics and I follow both of them and do not want to see them banned.

Sam Whited Fri 20 Jan 2023 1:44PM

This is also a separate conversation that's worth having, but I'd also like to stress that they're redirecting the conversation this way and we shouldn't allow it to be redirected. The problem with these two users in particular isn't just that they posted content that the moderators think is harmful and should not be allowed on the instance, it's the way they responded to us reaching out to discuss it (by ignoring what we told them and re-posting things we'd removed, hurling abuse at us and other users, etc.) Even if we accepted for the sake of argument that their content was okay, we still need a good framework for moderation and some sort of consequences for creating an unwelcoming space for other members of the co-op.

EDIT: to clarify, because I think I confused myself when writing that, what I'm saying is that they violated the CoC as it stands today and continue to do so with their aggressive behavior, regardless of the specific content.

Bob Haugen Fri 20 Jan 2023 2:02PM

@Sam Whited thanks for the quick response. So your comment is about behavior about moderator decisions, not the topics themselves. But...

they posted content that the moderators think is harmful and should not be allowed on the instance

...was that anti-vax stuff? (Just to see if I am understanding the situation...)

Sam Whited Fri 20 Jan 2023 2:04PM

That's correct; I just want to make sure that we don't get too deep down the rabbit hole of litigating whether vaccines are real or not when the real problem that needs to be addressed in the CoC is how moderators deal with bad behavior. Although, this was not actually the thread I thought it was (oops!) so maybe both are worth discussing.

Bob Haugen Fri 20 Jan 2023 2:18PM

@Sam Whited and all: I think a framework for moderation needs to have a graduated range of methods for dealing with controversies, from polite requests not to do that, to allowing people to easily filter out stuff that they don't want to clog their timelines, to deleting posts, to banning people.

And also methods for people to appeal moderator decisions.

Sam Whited Fri 20 Jan 2023 2:29PM

Indeed. My first step when someone reports something like this is to ask the reporter if they're comfortable discussing it with the person who was reported. If not, I delete the posts in question, then reach out to the user, inform them that this was potentially a CoC violation, and ask them not to do it again. (That applies to users on our server; for users on other servers I generally just skip to a limit or a ban depending on how bad the content is, and of course if the content is extremely harmful I might skip ahead a step or two depending on what it is.) Normally, that's enough. However, if a user gets aggressive or continues posting the same stuff, I think limiting their account is a good idea (existing followers can still interact, and you can search for that user and find them, but they don't show up in the timelines of others, and their posts don't show up in search results unless you're searching for that particular post, e.g. by typing in its URL).

If the posts are extremely harmful a provisional limit (or a ban if we don't even think the people following them will want to see the stuff they're now posting) that lasts until the moderation team can discuss it is probably the way to go. If it's an outright ban from the co-op that we think is the only solution, I assume we'd have to bring that up with the broader membership for a vote.

At any time the user could of course go on Loomio, post in our group to discuss it with all the moderators, or even bring it to the attention of the full community, who can probably override us if we overstep.

Will Murphy Fri 20 Jan 2023 5:27PM

Thanks @Sam Whited, this comment is really helpful to me. I understand now that the goal of this thread is frameworks/policies for individuals' behavior in response to Mastodon moderation actions. I was thinking of "moderation" only in the sense of Mastodon post moderation.

Do you think a CoC addition about respecting CWG decisions would help? For example, under "3. Encouraged Behaviour. I will..." add:

Honor the decisions of the elected CWG and use the CWG election process as the means to address and resolve disagreements on moderation policies

Steve Bosserman Fri 20 Jan 2023 5:54PM

"So I think it might help to create an alternative channel for such arguments. I don't know exactly how that would be done, might need to be a centralized Mastodon site where moderators can redirect such topics."

Maybe Bob's notion of offering pathways to alternative channels for various topics and participants is what Sam referred to, in part, as a topic for a separate conversation. To me, the concept of the fediverse suggests that everyone can find a place to have meaningful exchanges with others who share similar interests without hijacking the entire community. If this makes sense, then how to get topics and the people who want to talk about them into places where they can do so safely would be an interesting question to pursue.

Sam Whited Fri 20 Jan 2023 6:12PM

That seems sane to me (maybe with a mention that they can always bring it up with the community if we've overreached).

That being said, while I don't think it hurts to add more examples of things that we think are bad behavior, I also think we need to avoid falling into the trap that the trolls set of "well it's not explicitly laid out in the CoC therefore what I did wasn't a problem and you can't do anything about it". Just because the CoC doesn't mention a specific behavior doesn't mean we can't enforce the general spirit of the CoC.

Will Murphy Fri 20 Jan 2023 6:34PM

That being said, while I don't think it hurts to add more examples of things that we think are bad behavior, I also think we need to avoid falling into the trap that the trolls set of "well it's not explicitly laid out in the CoC therefore what I did wasn't a problem and you can't do anything about it". Just because the CoC doesn't mention a specific behavior doesn't mean we can't enforce the general spirit of the CoC.

Very much agree - CWG should be empowered to remove content that is, in their judgment, harmful to the community (whether that be by chasing off members, risking defederation, or other measures). In a way that's covered by 4.5 "[I will not] Make offensive, harmful, or abusive comments or insults, particularly in relation to diverse traits (as referenced in our values above)," but I'd wholeheartedly support the addition of a distinct bullet point on the topic.

Bob Haugen Fri 20 Jan 2023 6:43PM

I would also support some moderation guidelines about what is and is not suitable content for social.coop, which was originally created for social conversations about cooperatives.

For example, I would support ruling out some kinds of topics that are just argument-bait, like vax and anti-vax. Or any other topics that have nothing to do with cooperatives but become timeline hogs.

For example, I might enjoy mocking certain politicians but do not need to toot about it in social.coop.

Michael Potter Fri 20 Jan 2023 7:32PM

The weakness of a non-zero sum outlook for interacting with others is that it doesn't work when dealing with a zero-sum person. So, if someone posts obvious disinformation, and we do nothing, we may think we're being enlightened, but we're actually approving of very destructive content spreading through our service. People should be offered more for reporting disinformation than a talk about conflict resolution (especially when it's been tried and failed) and a tall glass of "shut up."

My stance is that there are verifiable, reliable sources of information and only disinformation itself calls this into question. In fact, the purpose of disinformation is to call reality into question to make it easier to spread lies. So I post again a link that I like to use when investigating sources:

https://realityteam.org/resources/credible-sources/

I propose that we alter the CoC to include a simple procedure that does not waste time. If a post includes a known conspiracy theory it should have an immediate mark of sensitive. Same with something that can be debunked at a place like Snopes or FactCheck. If the person won't delete or modify, keeps reposting deleted things, etc, then suspend them. There may be cases where a lesser freeze/limit are appropriate, but probably not.

Aaron Wolf Fri 20 Jan 2023 7:45PM

if someone posts obvious disinformation, and we do nothing

Well, doing nothing is not something I support or have advocated for in the slightest. Do note that a conflict-resolution process is not nothing and it doesn't help to attack it as such. Also, I have said all along that conflict-resolution should start after immediate harms are stopped. So, temporary blocking or hiding of some sort is often necessary in order to deal with emerging issues as a prerequisite to doing any conflict-resolution.

I propose that we alter the CoC…[snip]… If a post includes a known conspiracy theory it should have an immediate mark of sensitive. Same with something that can be debunked at a place like Snopes or FactCheck. If the person won't delete or modify, keeps reposting deleted things, etc, then suspend them. There may be cases where a lesser freeze/limit are appropriate,

but probably not.

With my tiny edits, I fully support this proposal.

I would maybe more generically say that suspension would be appropriate for any form of not cooperating with the CWG and the process. Though I would hope that there is a healthy appeal process to provide some check that the CWG is being fair and diligent. Any appeal process must be set up so that it is neither too costly nor open to abuse.

Ana Ulin Fri 20 Jan 2023 8:22PM

I do not believe that social.coop was "originally created for social conversations about cooperatives". From https://wiki.social.coop/home.html: "Social.coop was founded in 2017 in the wake of the BuyTwitter campaign. Since then, we have worked toward the goal of placing ever more or our online social lives under good cooperative governance."

Since its inception, social.coop has attracted folks interested in the cooperative space, and during the last couple of community strategy discussions there has been explicit interest in fostering more collaboration and communication about cooperativism, but that still does not make social.coop a space solely for conversations about cooperativism.

If non-coop content became discouraged on social.coop, I would probably shut down my account, as I rarely post about coop stuff (I think cat pictures are probably the bulk of my contributions to the timeline).

Scott McGerik Fri 20 Jan 2023 8:33PM

Agreed. I did not join social.coop because it is about discussing cooperatives. I joined because I am interested in participating in and operating in an environment of cooperative governance.

Michael Potter Fri 20 Jan 2023 9:04PM

I don't mean that conflict resolution is not valid or workable, just that in some cases, it's not going to work. If someone told me that a post of mine offended them, I'd be open to altering it or deleting it if they seemed sincere.

Bob Haugen Fri 20 Jan 2023 9:04PM

That's a good addition or correction to what I wrote above.

Bob Haugen Fri 20 Jan 2023 9:12PM

I wrote

social.coop, which was originally created for social conversations about cooperatives.

which @Ana Ulin and @Scott McGerik and maybe some other people say was wrong. They are correct, I was wrong. What's the best way to erase the false impression? I don't see a way to edit my original post. So best I see to do is add corrective comments.

Sam Whited Fri 20 Jan 2023 9:23PM

No big deal, it's a simple misunderstanding that has been corrected :)

Scott McGerik Fri 20 Jan 2023 9:30PM

Your post is sufficient! Thank you.

Scott McGerik Fri 20 Jan 2023 9:32PM

In a way, it was a good way to clarify what social.coop is about.

Scott McGerik Sat 21 Jan 2023 1:43AM

Stupid fat fingers. Gonna write this off line and then post it.

Scott McGerik Sat 21 Jan 2023 1:48AM

Until your post, I had not heard of consciousness raising. A search on the internet revealed https://en.wikipedia.org/wiki/Consciousness_raising on Wikipedia.

Stephanie Jo Kent Sat 21 Jan 2023 3:43PM

Echoing @Ana Ulin and @Scott McGerik that I joined to participate in "the goal of placing ever more or our online social lives under good cooperative governance." Truly appreciate the quality of communication here (in Loomio), and very much value and am grateful to all of you who consistently keep up and re-cultivate the spirit of collaboration.

Proposed addition to CoC prohibitions for harmful content

proposal by Will Murphy Closed Tue 24 Jan 2023 7:02PM

Not broadly supported. Withdrawn

As the goal of this thread is to propose amendments to the CoC, and several members expressed agreement with a comment suggesting this, I'd like to check if we're ready to start working on one such proposal. This is not a binding proposal, but a sense check that would lead to a formal proposal after updating with feedback.

Addition to section 4 of the Code of Conduct, Unacceptable Behavior:

[I will not] post content that is harmful to Social Coop community or to the community's standing within the broader fediverse

Results

| Option | Votes | % of votes cast | % of eligible voters |
|---|---|---|---|
| Looks good | 10 | 37 | 11 |
| Not sure yet | 3 | 11 | 3 |
| Concerned | 14 | 52 | 15 |
| Undecided | 68 | – | 72 |

27 of 95 votes cast (28% participation)

Aaron Wolf

Sat 21 Jan 2023 7:17PM

I support amending the CoC, specifically to mention disinformation and cooperation with the CWG process (maybe mentioning there the CWG's responsibility to block other harms when urgent, using their best judgment).

I'm concerned the proposal as is doesn't say anything about how "harmful" is to be interpreted. I imagine worst case outcomes including scaring away members who worry about being subjected to arbitrary opinions of "harmful" and exacerbating long debates about what counts as "harmful".

Scott McGerik

Sat 21 Jan 2023 7:17PM

I agree with @Aaron Wolf's concern regarding how "harmful" is to be interpreted, but I believe that can be addressed in a separate discussion. I believe there is a need for something like the proposed addition.

Matthew Slater

Sat 21 Jan 2023 7:17PM

'Harmful' needs definition, especially since physical harm is not involved. It's a terrible guideline which adds nothing to existing guidelines. My suggestion of 'hateful' seems much clearer. BTW, I left this group 5 days ago, but Loomio still invited me to vote.

I'm hurt at how, above, and in my absence, the portrayal of my behaviour continues to decline. @Sam Whited I have only ever been polite and curious, and never disrespected any other member, especially not a moderator.

Michael Potter

Sat 21 Jan 2023 7:17PM

This is so vague as to be meaningless, and I didn't see several people agree with it. Several people DID agree that disinformation should be specifically dealt with in the CoC, and the comment was added that some provision for loopholes might be a good idea.

Steve Bosserman

Sat 21 Jan 2023 7:17PM

I agree there needs to be an amendment to the CoC that clarifies "unacceptable behavior" and the consequences for a member who exhibits it. As others have already stated, how we word the amendment will make all the difference in how others interpret and act upon it. But I have confidence in the collective wisdom of this group to figure it out so it has the desired effect.

Sam Whited

Sat 21 Jan 2023 7:17PM

Something along these lines seems like a good addition. There's a thing the trolls do (or those who know their behavior is bad but want to keep doing it) where they pretend that because the CoC or other governing documents don't explicitly state something, it can't be moderated. But a well-written CoC gives the community latitude and flexibility to change standards with the times without having to amend it every time some new behavior surfaces.

Lynn Foster

Sat 21 Jan 2023 7:17PM

Concern: This is so broad that it is impossible for members to know what it is, and it will be very difficult to use for moderation without possible personal arbitrariness. I do think we need to agree on what "harm" means. And be very careful with "disinformation", which is often used to mean "I disagree because I have an agenda, or I automatically agree with the mainstream media's or a political party's or the government's statements, even if I haven't researched it myself".

Andrew Shead

Sat 21 Jan 2023 7:17PM

"Harmful" isn't specific enough; it encompasses what Section 4 of the CoC says already and doesn't add anything. Isn't it the collective responsibility of community individuals to Mute or Block content they find offensive? With enough Ms&Bs, bad content would be shunted into the void. Could the CWG then banish a user who has high levels of Ms&Bs? In this way the community is dynamically voting against a bad user, which makes the CWG's life easier and less complicated.

Nic

Sat 21 Jan 2023 7:17PM

We could set up a second instance to discuss indefinitely what harmful means.

But maybe we need to collaboratively develop policies for specific issues? Eg health info, current affairs or war reporting.

For health, posts outside mainstream consensus seem to need some kind of peer-reviewed science they point to. It can't be enough to say 'chocolate cures cancer': point to the study, or prefix 'I believe'.

Re disinfo, @mpotter's proposal above (banning known conspiracy theories?) sounds good.

Andrew Shead Sun 22 Jan 2023 3:52PM

As members of a co-operative, we each have one vote and a duty of care to exercise that vote. Beyond the CoC, regulating individual behaviour is a collective responsibility. We are each our own authority and different from other individuals; what is offensive to one person is mildly irritating to another. When a reader of a post to social.coop finds the post to be offensive or affronting or otherwise violating the CoC, the first thing for the reader to do is to Mute the author. If the author becomes obnoxious, the next step for the offended person is to Block the author.

In this way, the membership of the co-operative quietly exercises its voting power against undesirable behaviour, without immediately involving the CWG. Ultimately, the community will sequester obnoxious behaviour by its collective voting power through the sanctions of Mute and Block.

When the number of Mutes and Blocks against an author rises to an appropriate level, the CWG can then intervene to banish the obnoxious author or instance, without getting involved in an argument about what’s good or bad.

So, perhaps the CoC needs language that says community members must actively engage in moderation by first Muting or later Blocking posts they find offensive.

Aaron Wolf Sun 22 Jan 2023 4:14PM

I agree about collective responsibility. I strongly disagree about muting and blocking as the recommended defaults. They are options people should feel free to use, but they do not help anyone learn, do not resolve any misunderstandings…

What you're suggesting easily looks like a poster not even knowing they were being misunderstood or offending anyone until the CWG comes along and tells them they are on the verge of being kicked out of the co-op. This is dysfunctional and goes against everything that restorative justice and co-op principles stand for.

We need resolution mechanisms that include some place for assumptions of good faith, for people to know that people are reacting negatively, to get feedback, to learn…

Also, we need mechanisms where harmful posts can be muted more quickly for everyone. It is not okay for me to see some hateful post and then just mute/block for myself and leave it to everyone else to have to see the harmful post. The first people who see a harmful post should have a way to reduce the entire co-op's exposure as quickly as possible. Then restorative process focusing on learning could be done afterwards. And people joining the co-op should understand this so they aren't shocked or scared, so they know that if they get moderated in some way it doesn't mean their co-op standing is threatened. Any public debate about problem posts is liable to cause more defensiveness and escalate things. We should be stronger in our pro-active moderation and hiding of problem posts and more gracious and patient with resolution and learning.

https://wiki-dev.social.coop/Conflict_resolution_guide tries to go in a healthy direction.

In short: YES, the enforcement mechanism and the co-op onboarding process need to make it clear that we are all responsible for responding to problems. Our response options should include ways to more quickly hide problems from everyone and should emphasize restorative resolutions after that. Just adding up blocks and mutes on the way to bans is not restorative at all.

Andrew Shead Sun 22 Jan 2023 4:21PM

Thank you, that's something I hadn't considered.

Nathan Schneider

Sat 21 Jan 2023 7:17PM

This is far too vague, and vague prohibitions are ripe for abuse. As a member I would have no idea how to interpret that, and could therefore veer in the direction of excessive self-censorship. I encourage a new proposal, if necessary, that is far more precise, communicative, and enforceable.

Zee Spencer

Sat 21 Jan 2023 7:17PM

To be frank, I believe this is not a "rules" problem but a social immaturity problem. I think specifying that we do not want people to engage in disinformation or otherwise harmful content is reasonable to include as something to "point to" if moderators need a rule to fall back on; but I would prefer we proactively removed or blocked people who "toe the line." People who feel threatened by vagueness should err on the side of "post, but be ready to edit or delete at the moderators' request."

Graham

Sat 21 Jan 2023 7:17PM

My concern is that it might be really difficult/impossible to develop an agreed definition of harmful content.

Alex Rodriguez

Sat 21 Jan 2023 7:17PM

Changed my vote after reading some of the other concerns, especially re: having something more specific and also something that emphasises collective responsibilities and the steps that can be taken.

Stephanie Jo Kent Mon 23 Jan 2023 3:40PM

There is something in @Andrew Shead's idea about metrics regarding who gets Muted or Blocked, and as well with @Aaron Wolf 's point that no one should be suddenly surprised or shocked by a CWG message about posting content or behavior. "Harmful" is tricky since that's very much defined by the "receiver" - what hurts me may not hurt you. But I'm not in support of a blanket "mainstream consensus" as @Nic Wistreich suggested - there's much that is presumably common sense or mainstream that is in desperate need of challenge and change. Patterns of communicative behaviors that seek understanding and perspective are preferable to those that seek to rile folks up, yet sarcasm and dark humor are tools in service of necessary change, too. Is there a way (or need) to better describe the characteristics of patterns of posting that could be described in the CWG? Too much in the weeds?

Noah

Sat 21 Jan 2023 7:17PM

I agree with the concern that "harmful" is too open to interpretation - especially "within the broader fediverse" - there is a seed of something very legitimate in there, but as written it could be interpreted in very adverse ways. There are huge swathes of the fediverse which we absolutely do not need to be in good standing with!

Brian Vaughan Mon 23 Jan 2023 4:36PM

While it's good to try to be as clear as possible, there's a limit to what can be accomplished with formal rules, especially when dealing with malicious people who go to great lengths to subvert the intent of formal rules. I think we should rely on the moderation team acting on its best judgment of the intent of the membership in general, under the supervision of the membership in general.

Dmitri Z.

Sat 21 Jan 2023 7:17PM

I agree with the 'this is too vague' / 'repeats existing policy' concerns. At the very least, let's add a few more words to this clause, explaining the procedures of how 'harmful' will be determined.

Doug Belshaw Mon 23 Jan 2023 6:06PM

This statement is too vague and needs to talk about categories of harm. Also, people who have been kicked off social.coop, or have left before being pushed, should no longer have Loomio access IMHO.

J. Nathan Matias

Sat 21 Jan 2023 7:17PM

A good general principle when creating new policies is to be clear about what those policies mean, and I think "harm" is too vague a formulation. I would be happy to contribute to a conversation about what we might mean by harm. I would be open to approving a change to policies to include harm, so long as we would agree to implement those policies only after defining harms in greater detail.

Proposal: add "cooperation with CWG" to the CoC

proposal by Aaron Wolf Closed Mon 30 Jan 2023 7:01PM

Hi all, we have an apparently successful proposal! Zero votes against, one abstention. More than 18% of co-op members with Loomio accounts voted. More turnout would be ideal, but this is pretty clear support.

As this is specifically about and relevant to the Community Working Group, it seems appropriate to me to ask them to confirm and make the official change to the Code of Conduct. By my best understanding, of the 5 CWG members, only 1 voted in the poll. I assume the others also will support the change.

Here is the final version of the proposal, given some amendments after initial feedback:

Amend the Code of Conduct as shown at https://wiki-dev.social.coop/Code_of_conduct:

Expand item 6 "Reporting" to be renamed "Reporting and Resolving"

Within item 6, add a new line: "If the Community Working Group (CWG) contacts me about my role in any conduct complaint, I will cooperate with their process and guidance, communicating respectfully and working with them to make clear agreements for resolving the situation."

Note that this proposal can and will be edited if there are suggestions that do not change the basic gist.

Proposal for amending the Code of Conduct as shown at https://wiki-dev.social.coop/Code_of_conduct:

Expand item 6 "Reporting" to be renamed "Reporting and Resolving"

Within item 6, add a new line: "If the Community Working Group (CWG) contacts me about my role in any conduct complaint, I will cooperate with their process and guidance, communicating respectfully and working with them to make clear agreements for resolving the situation."

UPDATE EDITS: removed "fully" from "fully cooperate"; added a further clause to clarify the meaning.

Note: We might also like to include a reference to an appropriate appeals process, but we need to define that process before we can mention it in the CoC.

Note: Further updates about disinformation and updates to the CWG process can be made as well. For this poll, the question is whether the proposal above is good enough and safe enough to be helpful as a positive step on its own.

Results

| Option | Votes | % of votes cast | % of eligible voters |
|---|---|---|---|
| Agree | 51 | 98 | 16 |
| Abstain | 1 | 2 | 0 |
| Disagree | 0 | 0 | 0 |
| Undecided | 275 | | 84 |

52 of 327 votes cast (15% participation)

Michael Potter

Mon 23 Jan 2023 7:24PM

I hadn't read the specific line carefully before, when I disagreed. I like this, because it gives grounds for escalation in these cases of re-posting content, ignoring or even abusing moderators.

Blake Johnson

Mon 23 Jan 2023 7:39PM

While I'm sympathetic to the basis of bringing this forward, I think the wording is overly broad.

Eduardo Mercovich

Mon 23 Jan 2023 7:24PM

Seems simple and ok to me.

Christine

Sat 21 Jan 2023 7:17PM

This is too vague for users to understand what is and isn't permitted.

Alex Rodriguez

Mon 23 Jan 2023 7:24PM

Additional clarity in the update works for me.

Scott McGerik

Mon 23 Jan 2023 7:24PM

The proposed amendment should, hopefully, make it clear that uncooperative behavior is not accepted.

Andrew Shead

Mon 23 Jan 2023 7:24PM

Seems OK, but the concerns of @arod make me wonder about the wording.

Aaron Wolf Tue 24 Jan 2023 3:54AM

In response to @Alex Rodriguez's helpful points and questions: I did not mean "comply", as that gives me a sense of unidirectional orders. My suggestion of "cooperate" means being in constructive communication: hearing what the CWG is saying, responding, making agreements, and so on. Examples of not cooperating would be ignoring the CWG, responding with defensive aggression such as denial or sarcasm, making a public stink, reposting flagged content, and so on.

As I am not on the CWG, I'm not quite on top of the current process. I imagine their process will continue evolving and improving and getting better documented over time. Whatever the status, the co-op has elected them to do the work within the bounds of the agreed policies. So, whatever their process, that's what it refers to. If their process has problems, that's a separate issue to fix outside of the CoC. I did/do have some worries about worst case scenarios where the process has problems, and for that I really want to see a solid appeal process. I just don't think that's a blocker to updating the CoC for now.

As to "guidance", I was imagining that the CWG might make specific suggestions in their communication with people. I imagine cooperating with guidance as hearing it and telling the CWG whether the person agrees or has concerns; if they don't agree, they discuss it with the CWG to reach agreement rather than disregarding the suggestions. "Guidance" is different from process, because process would be the steps they take in communicating (such as keeping things private, being patient in waiting for replies, etc.), removing posts, adjusting privileges, or similar.

Note that I carefully wrote "my role" in a conduct complaint because I imagine this cooperate-with-CWG line to apply to everyone, including the people complaining or people who are tangential. For example, even if I was only part of an argument where someone else's post was reported by a third person, I still have responsibility to cooperate if the CWG asks for my involvement or input in resolving the issue.

Having clarified this, if anyone disagrees or has questions or suggestions for editing the proposal, please share your thoughts.

JohnKuti

Mon 23 Jan 2023 7:24PM

Moderators have a really tough job as technology creates increasingly toxic online environments. The advantage of social.coop ought to be the involvement of the whole community (via the CWG) to intervene. I think that is the idea here.

JohnKuti

Sat 21 Jan 2023 7:17PM

I'd like to see something positive rather than a prohibition. An obligation to respect the common culture of the site and promote cooperativist principles.

Tom Resing

Mon 23 Jan 2023 7:24PM

If it helps with moderation, it's good with me. I disagree with @arod's points on the language. You can cooperate with guidance. And you should! :)

Brecht Savelkoul

Tue 24 Jan 2023 8:18AM

While I agree with the general principle, the current phrasing is so vague that it could be used in pretty much any context. So I fear including this in its current state will create more problems than it resolves.

Brecht Savelkoul

Mon 23 Jan 2023 7:24PM

This looks reasonable and useful to me. To address @Alex Rodriguez's worries, maybe we just need to clarify what the minimum standard for "cooperating" is. I'd say "acknowledging and responding to complaints voiced by the moderators" would be the lowest bar for considering someone to be cooperating with the process.

Graham